Unethical online experiments risk real-world harm

Hatim A Rahman is an assistant professor of management and organisations at the Kellogg School of Management, Northwestern University, in Illinois

You are probably being experimented on right now. Organisations run countless tests online, attempting to learn how they can keep our eyeballs glued to the screen, convince us to buy a new product, or elicit a reaction to the latest news. But they often do so without letting us know — and with unintended, and sometimes negative, consequences.

In a recent study, my colleagues and I examined how it is possible for a digital labour platform to determine your next job, pay, and visibility to prospective employers. Such experiments are often conducted without worker consent or awareness — and they are widespread.

In 2022, a different study reported in the New York Times found that the professional networking platform LinkedIn had experimented on millions of users without their knowledge. These tests had a direct effect on users’ careers, the authors claimed, with many experiencing fewer opportunities to connect with prospective employers.

Uber has also experimented with fare payments, which many drivers told media outlets led to reduced earnings. Social media platforms’ tests have contributed to the polarisation of online content and the development of “echo chambers”, according to research in the journal Nature. And Google constantly experiments with search results, a practice that German academics found was putting spam websites at the top of its results.

The problem is not experimentation in itself, which can be useful to help companies make data-driven decisions. It is that most do not have any internal or external mechanisms to ensure that experiments are clearly beneficial to their users, as well as themselves.

Countries also lack strong regulatory frameworks to govern how organisations use online experiments and the spillover effects they can have. Without guardrails, the consequences of unregulated experimentation can be disastrous for everyone.

In our study, when workers found themselves unwilling guinea pigs, they expressed paranoia, frustration and contempt at having their livelihoods subject to experimentation without knowledge and consent. The consequences cascaded and affected their income and wellbeing.

Some refused to offer ideas for how the digital platform could improve. Others stopped believing any change was real. Instead, they sought to limit their online engagement.

The impact of unregulated online experimentation is likely to become still more widespread and pronounced.

Amazon has been accused by US regulators of using experiments to drive up product prices, stifle competition, and increase user fees. Scammers use online and digital experimentation to prey on elderly and vulnerable people.

And, now, generative artificial intelligence tools are lowering the cost of producing content for digital experimentation. Some organisations are even deploying technology that could allow them to experiment with our brain waves.

The increased integration of experimentation represents what we call the “experimental hand”, which can have powerful effects on workers, users, customers and society in ways that are poorly understood but potentially severe. Even with the best of intentions, the current culture of experimentation, lacking multiple checks and balances, can be disastrous for people and society.

But we do not have to embrace a Black Mirror future in which our every move, interaction and thought is subject to exploitative experimentation. Organisations and policymakers would be wise to learn from the mistakes scientists made half a century ago.

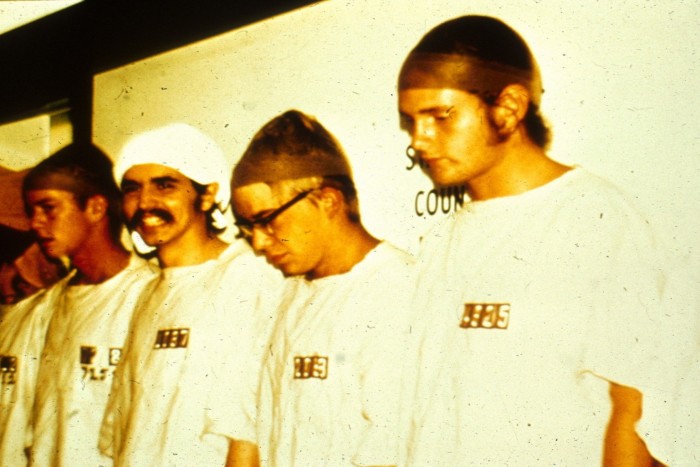

The infamous 1971 Stanford Prison Experiment, in which the university’s psychology professor Philip Zimbardo randomly assigned participants to the role of prisoner or prison guard, quickly descended into guards subjecting prisoners to egregious psychological abuse.

Despite observing these consequences, he did not stop the experiment. It was PhD student Christina Maslach, who had come to help conduct interviews, who voiced strong objections and contributed to its closure.

The lack of supervision over the experiment’s design and implementation accelerated the adoption of Institutional Review Boards (IRBs) at universities. Their purpose is to ensure that every experiment involving human subjects is conducted ethically and complies with the law, including obtaining informed consent from subjects and allowing them to opt out.

For IRBs to function beyond academia, organisation leaders must ensure they include independent experts with diverse expertise to impose the highest ethical standards.

But this is not enough. Facebook’s notorious 2012 experiment, in which it measured how users reacted to changes in the positive or negative posts in their feed, was approved by Cornell University’s IRB. The social media platform claimed its users’ agreement to the terms of service constituted informed consent.

We also need crowdsourced accountability to ensure organisations implement ethically robust experiments. Users themselves are often the closest to an experiment and the best informed to provide input. A diverse group of them should have a voice in any experiment’s design.

If organisations are unwilling to respond to users’ demands, then those exposed to experiments could create their own platforms to keep each other informed. Workers on outsourcing platform Amazon Mechanical Turk, known as MTurk, for example, formed Turkopticon to create a crowdsourced rating system of employers when MTurk refused to provide them with ratings.

It should not take another Zimbardo experiment to spur organisations and governments to institute guardrails for ethical experimentation. Nor should they simply wait for regulators to act. Maslach didn’t delay, and neither should we.

Tim Weiss, assistant professor of innovation and entrepreneurship at Imperial College London, and Arvind Karunakaran, assistant professor of management science and engineering at Stanford University, contributed to this article
