Getting the facts straight on online misinformation

The growth in misleading rhetoric from US congressional candidates on topics such as election integrity has put renewed pressure on social media platforms ahead of November’s vote. And the perception that tech companies are doing little to fight misinformation raises questions about their democratic obligations and poses commercial risks. But, perhaps surprisingly, recent initiatives suggest that platforms may be able to channel partisan motivations to democratise moderation.

One explanation for platforms’ seemingly tepid response is the conflicting pressure companies face from critics. Seven out of 10 US adults — and most experts — see misinformation as a “major problem” and believe internet companies should do more to curb its spread. Yet prominent Republican politicians have called moderation “censorship”, and threatened to pass legislation curbing the ability of platforms to self-regulate. Regulation poses serious challenges for the business model of social media companies, as does the loss of users who are disillusioned by the sense that either misinformation or political bias is running rampant.

How can social media companies thread the needle of engaging in meaningful moderation while escaping accusations of partisan bias and censorship? One potential solution that platforms have begun to test is to democratise moderation through crowdsourced fact-checking. Instead of relying solely on professional fact-checkers and artificial intelligence algorithms, they are turning to their users to help pick up the slack.

This strategy has many upsides. First, using laypeople to fact-check content is scalable in a way that professional fact-checking — which relies on a small group of highly trained experts — is not. Second, it is cost-effective, especially if users are willing to flag inaccurate content without getting paid. Finally, because moderation is done by members of the community, companies can avoid accusations of top-down bias in their moderation decisions.

But why should anyone trust the crowd to evaluate content in a reasonable manner? Research led by my colleague Jennifer Allen sheds light on when crowdsourced evaluations might be a good solution — and when they might not.

First, the good news. One scenario we studied was when laypeople are randomly assigned to rate specific content, and their judgments are combined. Our research has found that the averaged judgments of small, politically balanced crowds of laypeople agree with expert assessments about as closely as the experts agree with one another.

This might seem surprising, since the judgments of any individual layperson are not very reliable. But more than a century of research on the “wisdom of crowds” has shown how combining the responses of many non-experts can match or exceed expert judgments. Such a strategy has been employed, for example, by Facebook in its Community Review, which hired contractors without specific training to scale fact-checking.
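To make the idea of a politically balanced average concrete, here is a minimal sketch. It is not the method used in our research or by any platform: the function name, the party labels and the 1 to 7 rating scale are all assumptions chosen for illustration. It averages ratings within each political group first, so that each side carries equal weight even if one side supplies more raters.

```python
from statistics import mean


def balanced_crowd_score(ratings):
    """Average accuracy ratings so each political group counts equally.

    `ratings` is a list of (party, score) pairs. The party labels and the
    1-7 score scale are hypothetical, used only for this illustration.
    """
    by_party = {}
    for party, score in ratings:
        by_party.setdefault(party, []).append(score)
    # Average within each group first, then across groups, so a group
    # with more raters does not dominate the combined judgment.
    return mean(mean(scores) for scores in by_party.values())


# Three raters from one side, one from the other (made-up numbers).
ratings = [("D", 6.0), ("D", 7.0), ("D", 5.0), ("R", 2.0)]
simple_average = mean(score for _, score in ratings)
balanced_average = balanced_crowd_score(ratings)
```

In this made-up example the simple average is pulled towards the side with more raters, while the balanced average sits midway between the two groups' views.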

However, results are more mixed when users can fact-check whatever content they choose. In early 2021, Twitter released a crowdsourced moderation programme called Birdwatch, in which regular users can flag tweets as misleading, and write free-response fact-checks that “add context”. Other members of the Birdwatch community can upvote or downvote these notes, to provide feedback about their quality. After aggregating the votes, Twitter highlights the most “helpful” notes and shows them to other Birdwatch users and beyond.

Despite the lofty intentions, a new study by our team found that political partisanship is a major driver of users’ engagement on Birdwatch. Users overwhelmingly flag and fact-check tweets written by people with opposing political views. They primarily upvote fact-checks written by their co-partisans, and grade those of counter-partisans as unhelpful. This practice rendered Birdwatchers’ average helpfulness scores nearly meaningless as a way to select good fact-checkers, as scores were driven mostly by who voted rather than by the note’s actual contents.

Here’s the pleasant surprise, though: although our research suggests politics is a major motivator, most tweets Birdwatchers flagged were indeed problematic. Professional fact-checkers judged 86 per cent of flagged tweets misleading, suggesting the partisan motivations driving people to participate are not causing them to indiscriminately flag counter-partisan content. Instead, they are mostly seeking out misleading posts from across the aisle. The two sides are somewhat effectively policing each other.

These investigations leave the platforms with several important takeaways. First, crowdsourced fact-checking can be a potentially powerful part of the solution to moderation problems on social media if deployed correctly.

Second, channelling partisan motivations to be productive, rather than destructive, is critical for platforms that want to use crowdsourced moderation. Partisanship appears to motivate people to volunteer for fact-checking programmes, which is crucial for their success. Rather than seeking to recruit only the rare few who are impartial, the key is to screen out the small fraction of zealots who put partisanship above truth.

Finally, more sophisticated strategies are needed to identify fact-checks that are helpful. Because of the partisan bias exhibited by ratings, it is not enough to simply average helpfulness scores. Developing such strategies is currently a primary focus of the Birdwatch team.
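The problem with a simple average can be shown with a small sketch. This is not Birdwatch's scoring method, which is not described here; the function names, party labels and 0/1 vote encoding are assumptions made for illustration. One hypothetical alternative is to score a note by the least favourable group's average, so that a note counts as helpful only when raters on both sides agree.

```python
from statistics import mean


def naive_helpfulness(votes):
    """Simple average of helpfulness votes (1 = helpful, 0 = unhelpful)."""
    return mean(vote for _, vote in votes)


def cross_partisan_helpfulness(votes):
    """Hypothetical score: the lowest per-group average.

    Returns None when fewer than two groups have voted, since agreement
    across the aisle cannot be assessed in that case.
    """
    by_party = {}
    for party, vote in votes:
        by_party.setdefault(party, []).append(vote)
    if len(by_party) < 2:
        return None
    return min(mean(group_votes) for group_votes in by_party.values())


# A note upvoted by three co-partisans and downvoted by one counter-partisan
# (made-up votes with hypothetical group labels "L" and "R").
votes = [("L", 1), ("L", 1), ("L", 1), ("R", 0)]
naive = naive_helpfulness(votes)
cross = cross_partisan_helpfulness(votes)
```

Here the simple average looks favourable only because more raters from one side turned up, whereas the cross-partisan score reflects that the other side found the note unhelpful.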

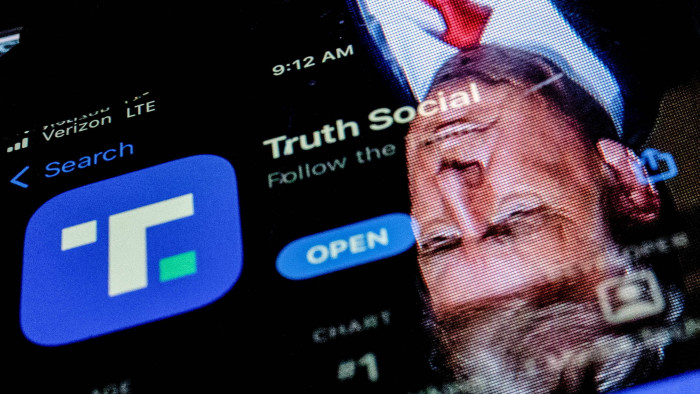

Companies would probably love to eschew the thorny practice of content moderation entirely. However, Truth Social, Donald Trump’s new “uncensored” social media platform, has shown the perils of dodging the issue. Misinformation and threats of domestic terrorism have abounded, causing the Google Play store to block the app. Moderation is necessary for platforms to survive, lest they be overrun with dangerous rumours and calls to violence. But engaging users in that moderation process can help platforms satisfy critics from both sides — identifying misinformation at scale, while avoiding claims of top-down bias. Thus, there is a strong business case for platforms investing in crowdsourced fact-checking.

Combating misinformation is a challenge requiring a wide range of approaches. Our work suggests that an important route for social media companies to save democracy from misinformation is to democratise the moderation process itself.

David Rand is the Erwin H Schell professor at MIT and co-author of the article ‘Birds of a feather don’t fact-check each other’
